F5 BIG-IP LTM has many load balancing algorithms that we will discuss and compare in this section.

Are they static or dynamic? What is the difference between a health monitor and a performance monitor? What are the requirements for implementing each load balancing algorithm? Which load balancing algorithm is best for my application? These are the questions we will answer in this section.

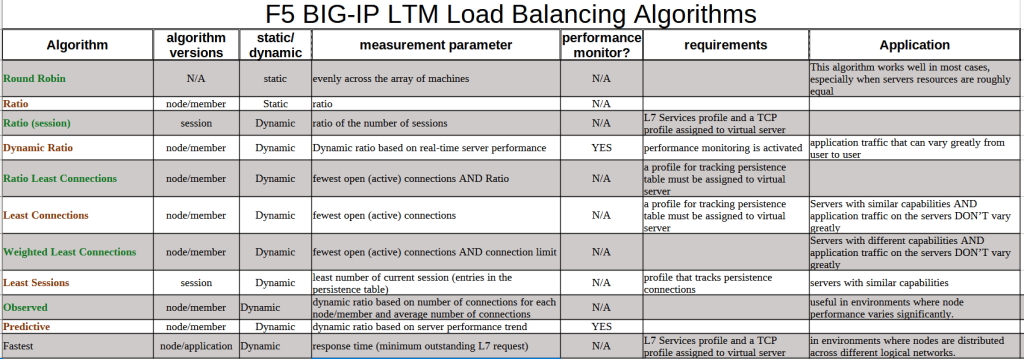

F5 BIG-IP LTM Load Balancing Algorithms

In the previous sections, we discussed and implemented the BIG-IP LTM Round Robin and Ratio load balancing algorithms.

In this section we are going to compare different load balancing algorithms supported by BIG-IP LTM.

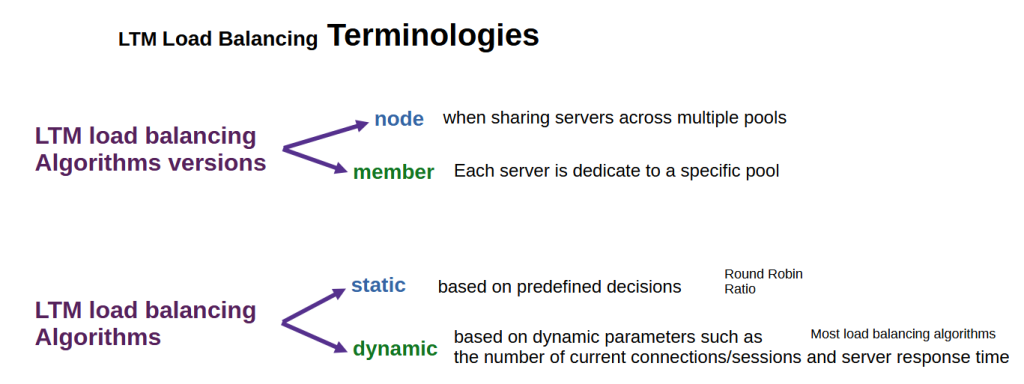

LTM Load Balancing Terminology

Before introducing and comparing LTM load balancing algorithms, we will first discuss some terminology.

Most load balancing algorithms have two versions: “node” and “member”.

Typically, we use the “node” version of an algorithm when servers are shared across multiple pools, because a node-based algorithm takes into account the server's connections from all pools. Otherwise, the “member” version of the algorithm is used.

Most load balancing algorithms are also “dynamic”. This means the load balancing decision is based on parameters that change over time, particularly the number of current connections or sessions to each server and the server response time.

In a “static” load balancing algorithm, the decision is based on predefined rules rather than on measurements. Two examples are Round Robin, which distributes requests evenly across the servers, and Ratio, which distributes requests according to the ratio weight that we manually assign to each node or pool member.

Both the Round Robin and Ratio methods were discussed and implemented in the previous sections.

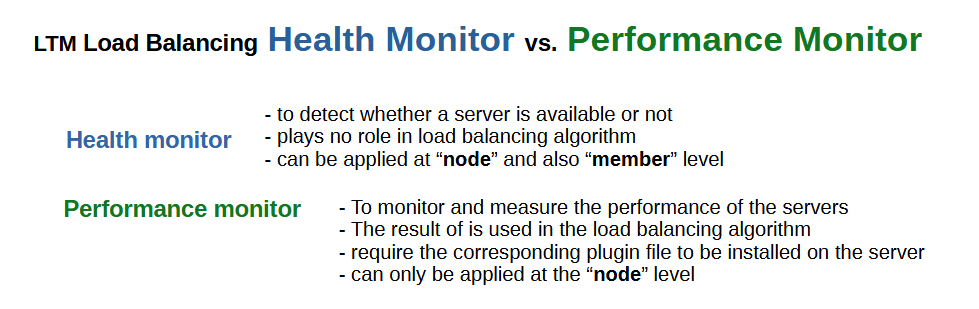

Health Monitor versus Performance Monitor

Two other terms you will come across when reading about load balancing algorithms are “health monitor” and “performance monitor”.

A “health monitor” detects whether a server is available, and traffic is forwarded only to servers that are available. Beyond that, the health monitor plays no role in how the load balancing algorithm chooses a server.

A health monitor can be applied at both the “node” level and the “member” level.

A “performance monitor” monitors and measures the performance of the servers, for example by polling SNMP MIBs or by measuring CPU and memory load. The load balancing algorithm uses the results of performance monitoring.

Performance monitors require the corresponding plug-in to be installed on the server.

The performance monitor can only be applied at the “node” level.

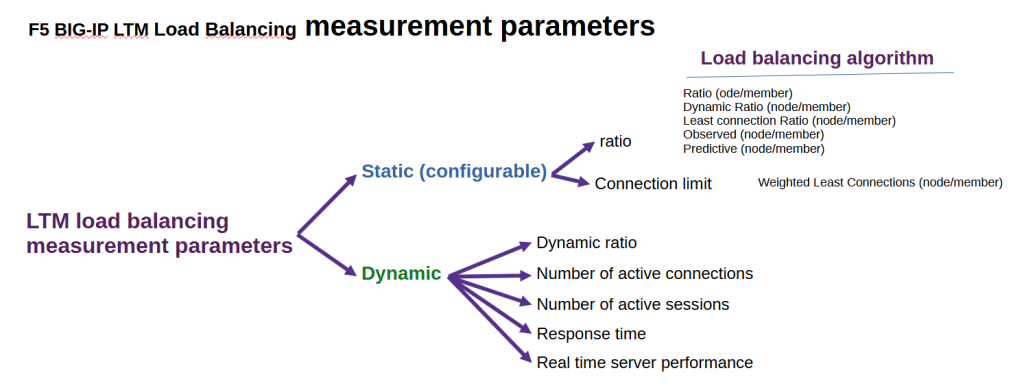

F5 LTM Load Balancing Measurement Parameters

Each load balancing algorithm has a measurement parameter or a set of measurement parameters that are used to decide which server to forward the next request to.

Some of these measurement parameters are configurable by the administrator and some of them are calculated dynamically.

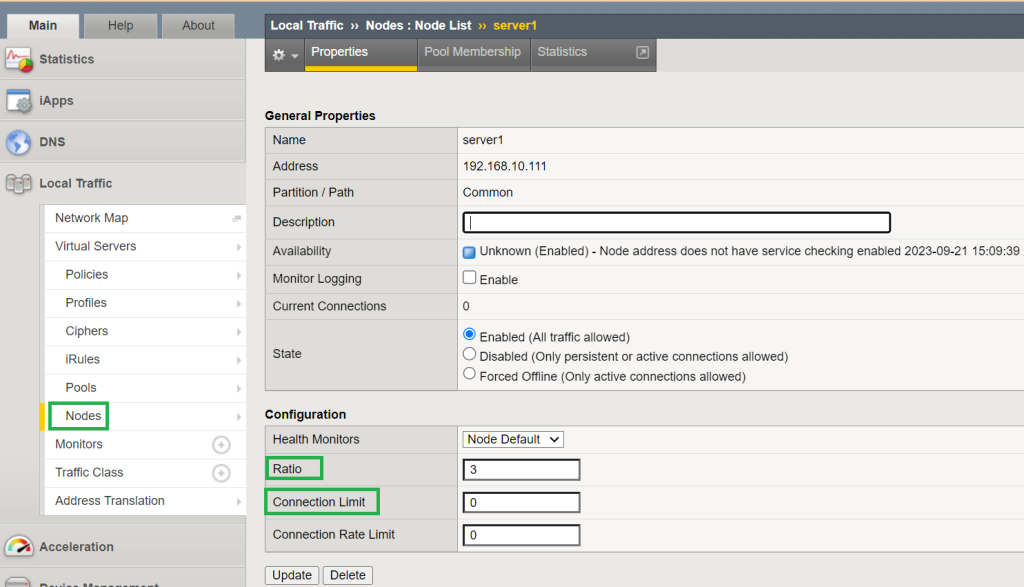

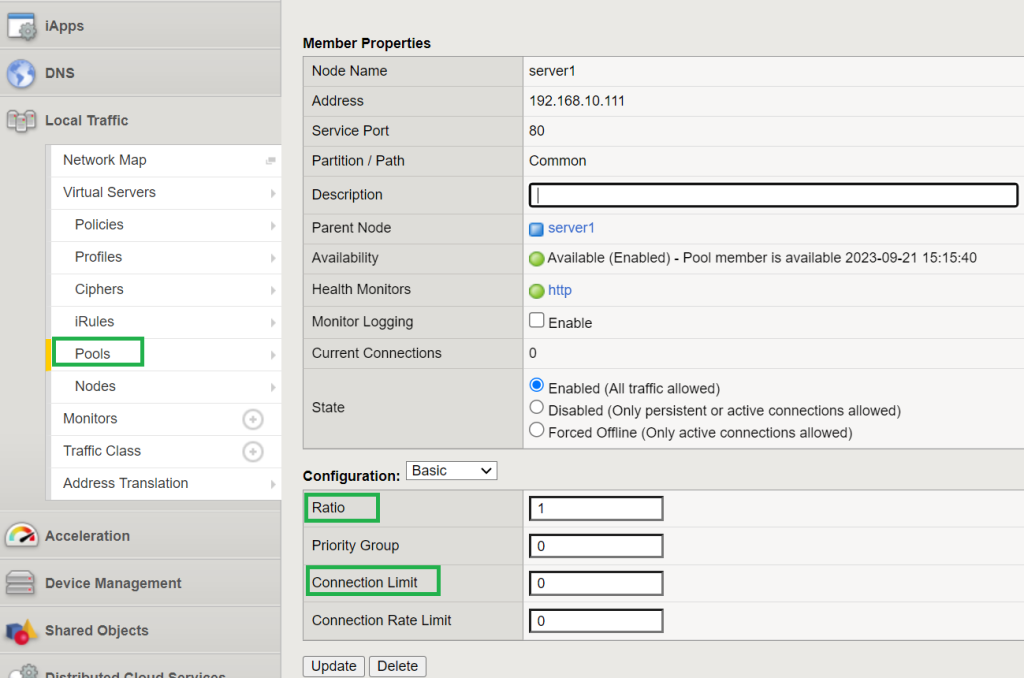

“Ratio” and “connection limit” are configurable parameters. Both can be set at the node level and at the pool member level.

Ratio

The “ratio” determines a server's share of connections relative to the other servers. For example, if the ratio of Server1 is 2 and the ratio of Server2 is 3, then out of every 5 connection requests, 2 are forwarded to Server1 and 3 are forwarded to Server2. The default ratio is 1.
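The arithmetic behind the ratio can be sketched in a few lines. This is an illustrative helper, not BIG-IP code, and the function name `ratio_shares` is hypothetical. Each server's expected share of connections is its ratio divided by the sum of all ratios.

```python
def ratio_shares(ratios: dict[str, int]) -> dict[str, float]:
    """Hypothetical helper: expected fraction of connections per server.

    A server's share is its ratio divided by the sum of all ratios.
    """
    total = sum(ratios.values())
    return {server: r / total for server, r in ratios.items()}


# Example from the text: Server1 has ratio 2, Server2 has ratio 3.
shares = ratio_shares({"Server1": 2, "Server2": 3})
print(shares)  # {'Server1': 0.4, 'Server2': 0.6}
```

With the default ratio of 1 on every server, each server's share is equal, which is the Round Robin case.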

The ratio can either be configured by the administrator or calculated dynamically by some load balancing algorithms.

Many load balancing algorithms base their decision on a static or dynamic ratio, or at least use the ratio as one of the inputs to the decision.

Algorithms such as “Ratio (node/member)”, “Dynamic Ratio (node/member)”, “Ratio Least Connections (node/member)”, “Observed (node/member)” and “Predictive (node/member)” use either a static or a dynamic ratio for the load balancing decision.

Connection Limit

The “connection limit” parameter specifies the maximum number of concurrent connections that can be forwarded to the server.

The default connection limit is 0, which means there is no limit.

The connection limit also indicates the server's capacity. If the connection limit of Server1 is 100 and that of Server2 is 200, Server2 is treated as having twice the capacity of Server1.

Algorithms whose names begin with “Weighted”, such as “Weighted Least Connections (node)” and “Weighted Least Connections (member)”, use this parameter in their decision.

LTM Dynamic Measurement Parameters

In addition to the static measurement parameters, some parameters are measured dynamically and are not configured by the administrator.

“Dynamic ratio”, “number of open (active) connections”, “number of open (active) sessions”, “response time” and “server real-time performance” are the dynamic measurement parameters that affect load balancing decisions.

Session vs. Connection in LTM Load Balancing Algorithms

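You may also notice the keywords “connection” and “session” in the names of load balancing algorithms.

What is the difference between a session and a connection as measurement parameters? In summary, a session may contain multiple connections. For example, a single user session can open several TCP connections to the same server over its lifetime, so a server's session count and its connection count can differ.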
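To see why counting sessions and counting connections can lead to different decisions, here is a minimal sketch under stated assumptions. The snapshot below is hypothetical data, not BIG-IP output: each server maps session IDs to the connection IDs currently open within that session.

```python
# Hypothetical snapshot: server -> {session ID -> set of open connection IDs}.
active = {
    "Server1": {"s1": {"c1", "c2", "c3"}, "s2": {"c4", "c5", "c6"}},
    "Server2": {"s3": {"c7"}, "s4": {"c8"}, "s5": {"c9"}, "s6": {"c10"}},
}


def session_count(server: str) -> int:
    """Number of active sessions on a server."""
    return len(active[server])


def connection_count(server: str) -> int:
    """Number of active connections on a server, summed over its sessions."""
    return sum(len(conns) for conns in active[server].values())


# Server1: 2 sessions and 6 connections. Server2: 4 sessions and 4 connections.
least_conn = min(active, key=connection_count)
least_sess = min(active, key=session_count)
print(least_conn, least_sess)  # Server2 Server1
```

A connection-based algorithm would prefer Server2 here, while a session-based algorithm would prefer Server1.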

Most load balancing algorithms use dynamic measurement parameters to load balance requests.

Next, we explain how each algorithm uses one or more of the above measurement parameters to load balance requests.

Compare LTM Load Balancing Algorithms

This is an overview of the LTM load balancing algorithms that answers the following questions at a glance. What are the different versions of each algorithm? Is it static or dynamic? Which measurement parameters does BIG-IP use to decide which server receives the next request? Which algorithms require a performance monitor? What are the requirements for using each algorithm? And finally, which applications is each load balancing algorithm suited for?

Next, we discuss each of these load balancing algorithms in more detail.

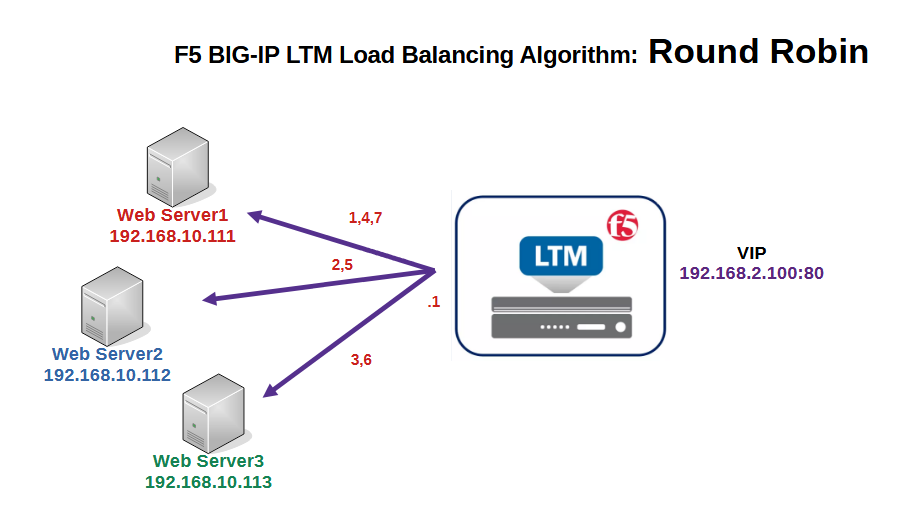

Round Robin:

The Round Robin algorithm distributes requests evenly among all pool members in turn. It was discussed and implemented in the previous sections.

This algorithm works well in most cases, especially when the servers' resources are roughly equal.
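As a minimal sketch of the rotation itself, the following assigns requests to members strictly in turn. The function name `round_robin` is hypothetical, and the sketch ignores health status and everything else BIG-IP tracks.

```python
from itertools import cycle, islice


def round_robin(members: list[str], n: int) -> list[str]:
    """Hypothetical helper: assign n requests to members in strict rotation."""
    return list(islice(cycle(members), n))


print(round_robin(["Server1", "Server2", "Server3"], 5))
# ['Server1', 'Server2', 'Server3', 'Server1', 'Server2']
```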

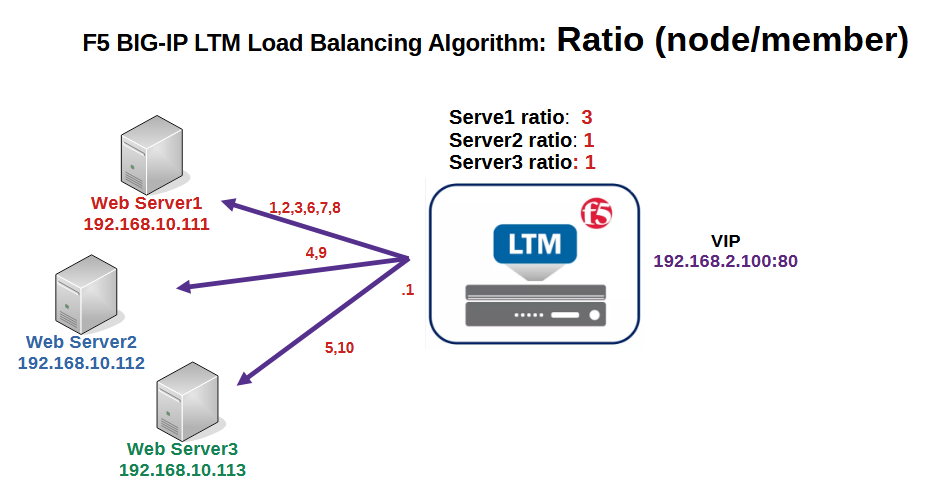

Ratio (node/member):

The ratio algorithm (node/member) was also discussed and implemented in the previous sections.

Like round-robin, this algorithm is also static and does not measure anything. Requests are distributed proportionally to the ratio configured in the node/member section. By default the ratio is one and it behaves like a round-robin algorithm.

In this figure, the ratio of Server1 has been changed to 3, while the ratios of Server2 and Server3 remain at the default value of 1.

In this example, requests 1, 2, and 3 are forwarded to Server1. Requests 4 and 5 are then forwarded to Server2 and Server3, respectively. Next, requests 6, 7, and 8 are forwarded to Server1, and finally requests 9 and 10 are forwarded to Server2 and Server3.
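The sequence described above can be reproduced with a simple weighted round robin. In each round, a member receives as many consecutive requests as its ratio. This is a sketch that assumes this grouping, chosen because it matches the figure. BIG-IP's internal ordering may interleave members differently while keeping the same proportions. The name `ratio_sequence` is hypothetical.

```python
from itertools import cycle, islice


def ratio_sequence(ratios: dict[str, int], n: int) -> list[str]:
    """Hypothetical weighted round robin: in each round, a member receives
    `ratio` consecutive requests (dict order gives the rotation order)."""
    one_round = [member for member, r in ratios.items() for _ in range(r)]
    return list(islice(cycle(one_round), n))


seq = ratio_sequence({"Server1": 3, "Server2": 1, "Server3": 1}, 10)
for i, server in enumerate(seq, start=1):
    print(i, server)
```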

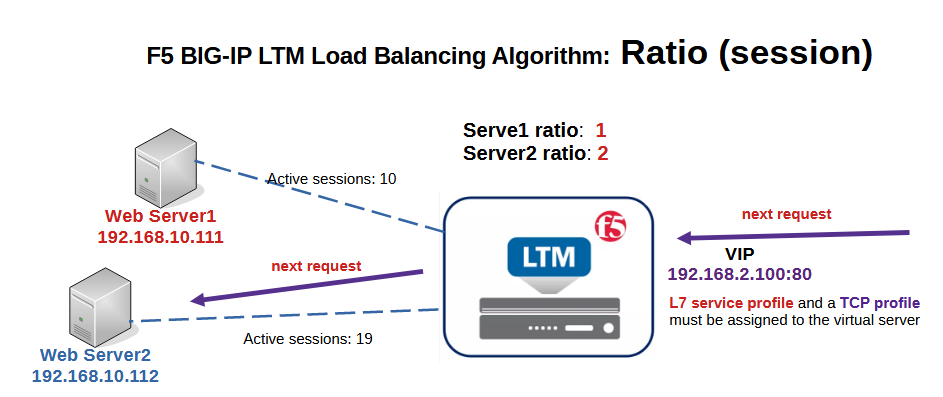

Ratio (Session):

With the Ratio (Session) algorithm, new requests are distributed based on the number of open (active) sessions and the ratio configured for each pool member.

This algorithm is dynamic because it needs to measure and track the number of active sessions on each server.

For example, suppose Server2’s ratio is twice the ratio configured for Server1, Server1 has 10 active sessions, and Server2 has fewer than 20 active sessions. In that case, the new request is forwarded to Server2.
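One way to express this decision is to divide each member's active sessions by its ratio and pick the smallest result. This is a sketch under that assumption, not F5's published formula. The function name and the tie-breaking rule (first listed member wins) are hypothetical.

```python
def pick_ratio_session(members: dict[str, tuple[int, int]]) -> str:
    """Hypothetical selection: members maps name -> (ratio, active_sessions).
    Pick the member with the lowest active_sessions / ratio."""
    return min(members, key=lambda m: members[m][1] / members[m][0])


# Server1: ratio 1, 10 sessions -> 10.0; Server2: ratio 2, 18 sessions -> 9.0
print(pick_ratio_session({"Server1": (1, 10), "Server2": (2, 18)}))  # Server2
```

Once Server2 reaches 20 or more sessions, its normalized load is no longer below Server1's, which matches the "fewer than 20" condition in the text.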

The Ratio (Session) algorithm requires an L7 service profile and a TCP profile to be assigned to the virtual server. These profiles will be discussed in the next sections.

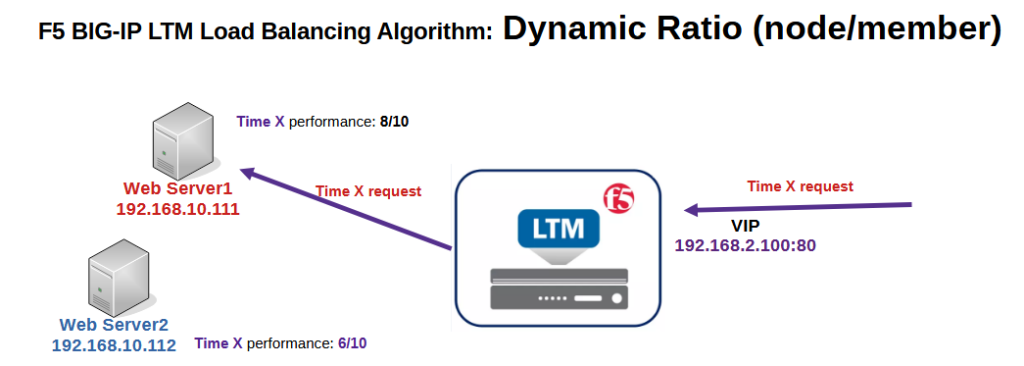

Dynamic Ratio (node/member):

The Dynamic Ratio algorithm works like the Ratio algorithm: requests are distributed in proportion to the ratio of the nodes or pool members. The difference is that the ratio is calculated dynamically from the results of real-time performance monitoring of the servers.

This algorithm requires performance monitoring to be enabled for the servers.

This algorithm works well when the type of traffic varies greatly from user to user, so that the performance of the servers changes dynamically.

In this figure, at a certain point in time, Server1’s performance is better than Server2’s, so the incoming request at that time is forwarded to Server1.
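The sketch below illustrates the idea with a purely hypothetical mapping from a monitored CPU load to a ratio. The lower the load, the higher the ratio, with a minimum of 1. BIG-IP's real performance monitors use their own metrics and weighting, so the formula and the name `dynamic_ratios` are assumptions for illustration only.

```python
def dynamic_ratios(cpu_load: dict[str, float]) -> dict[str, int]:
    """Hypothetical mapping from monitored CPU load (0-100 %) to a ratio:
    lower load gives a higher ratio, never below 1."""
    return {s: max(1, round((100 - load) / 10)) for s, load in cpu_load.items()}


# Snapshot at one point in time: Server1 is performing better than Server2.
ratios = dynamic_ratios({"Server1": 20.0, "Server2": 60.0})
print(ratios)  # {'Server1': 8, 'Server2': 4}
```

Under this assumed mapping, Server1 would receive twice as many requests as Server2 until the next monitor update changes the ratios.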

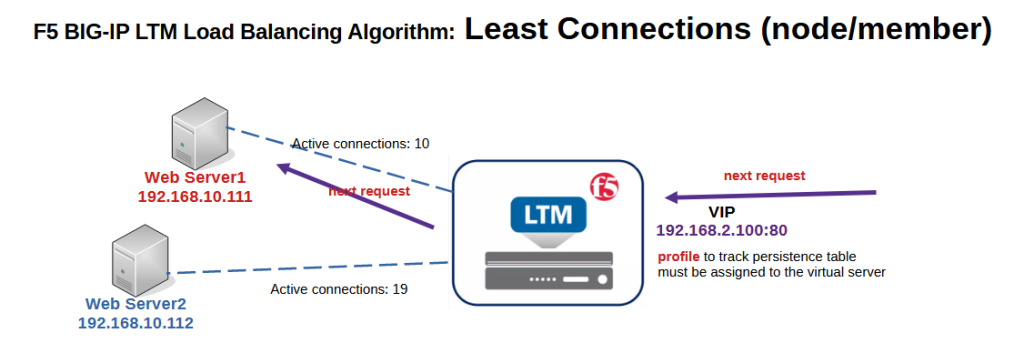

Least Connections (node/member):

In the Least Connections algorithm, each incoming request is forwarded to the server with the fewest active connections.

In this figure, the next incoming request is forwarded to Server1 because it has fewer active connections.
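A minimal sketch of the Least Connections choice follows. The connection counts are hypothetical example values, and ties go to the first member listed. That tie-breaking is an assumption of the sketch, not documented BIG-IP behavior.

```python
def least_connections(active: dict[str, int]) -> str:
    """Hypothetical helper: return the member with the fewest active
    connections (ties go to the first member listed)."""
    return min(active, key=active.get)


print(least_connections({"Server1": 12, "Server2": 17}))  # Server1
```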

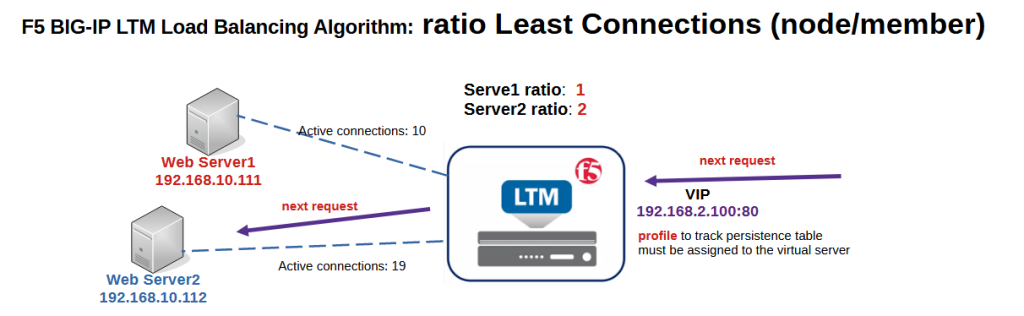

Ratio Least Connections (node/member):

Ratio Least Connections works like Least Connections, but in addition to the number of active connections, it also considers the ratio of the node or pool member.

In this figure, Server1 has fewer active connections than Server2, but the next request is forwarded to Server2. Server2's ratio is twice that of Server1, so relative to its ratio, Server2 is the less loaded server.
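A sketch of this behavior, assuming the decision divides active connections by the ratio and picks the smallest result. The numbers are hypothetical and chosen to match the situation in the figure: Server1 has fewer connections, yet Server2 is selected.

```python
def ratio_least_connections(members: dict[str, tuple[int, int]]) -> str:
    """Hypothetical selection: members maps name -> (ratio, active_connections).
    Pick the member with the lowest active_connections / ratio."""
    return min(members, key=lambda m: members[m][1] / members[m][0])


# Server1: ratio 1, 10 connections -> 10.0; Server2: ratio 2, 16 connections -> 8.0
print(ratio_least_connections({"Server1": (1, 10), "Server2": (2, 16)}))  # Server2
```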

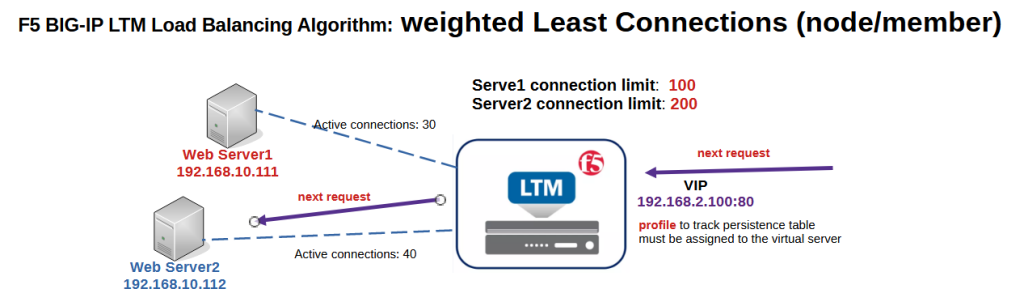

Weighted Least Connections (node/member):

Weighted Least Connections works like Ratio Least Connections, except that it considers the number of active connections together with the connection limit instead of the ratio.

The connection limit is a parameter that can be configured at the node and pool member levels.

In this example, the connection limit for Server1 is configured to 100 and for Server2 to 200. The number of active connections in Server1 is 30% of the server capacity, but the number of active connections in Server2 is 20% of the capacity. Therefore, the next request is forwarded to Server2.
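The comparison above can be written as "active connections divided by connection limit, lowest wins". In this sketch, 30% of 100 is 30 connections and 20% of 200 is 40 connections. The function name is hypothetical. The sketch also assumes every member has a non-zero limit, because a limit of 0 means "no limit" and gives no capacity to divide by.

```python
def weighted_least_connections(members: dict[str, tuple[int, int]]) -> str:
    """Hypothetical selection: members maps name -> (active_connections,
    connection_limit). Pick the member using the smallest fraction of its
    limit. Assumes every limit is non-zero (0 would mean 'unlimited')."""
    for name, (_, limit) in members.items():
        if limit <= 0:
            raise ValueError(f"{name} needs a positive connection limit")
    return min(members, key=lambda m: members[m][0] / members[m][1])


# Figure values: Server1 at 30 of 100 (30 %), Server2 at 40 of 200 (20 %).
print(weighted_least_connections({"Server1": (30, 100), "Server2": (40, 200)}))  # Server2
```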

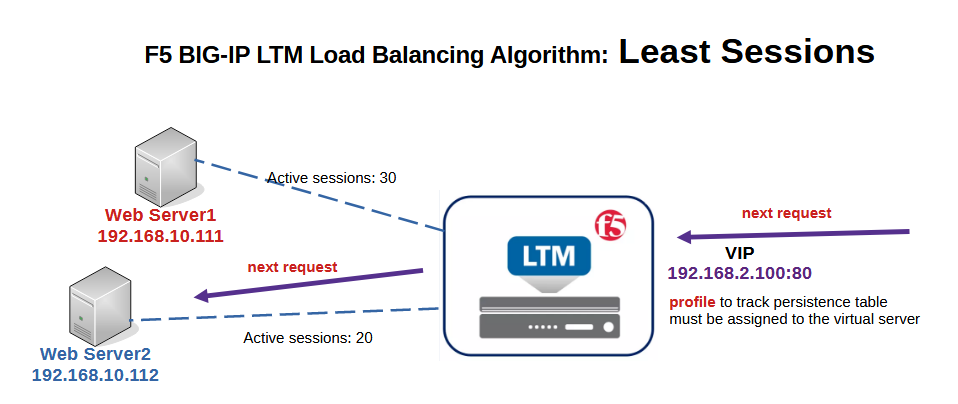

Least Sessions:

The Least Sessions algorithm measures the number of active sessions on each server and forwards the next request to the server with the fewest active sessions.
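The bookkeeping behind Least Sessions can be sketched as a small tracker. It counts active sessions per server, sends each new session to the least busy one, and decrements the count when a session ends. The class and method names are hypothetical. Real session tracking in BIG-IP depends on the profiles attached to the virtual server.

```python
class LeastSessions:
    """Hypothetical tracker: counts active sessions per server and sends
    each new session to the server with the fewest."""

    def __init__(self, servers: list[str]) -> None:
        self.sessions = {s: 0 for s in servers}

    def open_session(self) -> str:
        # Ties go to the first server listed.
        server = min(self.sessions, key=self.sessions.get)
        self.sessions[server] += 1
        return server

    def close_session(self, server: str) -> None:
        self.sessions[server] -= 1


lb = LeastSessions(["Server1", "Server2"])
first = lb.open_session()   # Server1 (tie, first listed)
second = lb.open_session()  # Server2
lb.close_session(first)     # Server1 drops back to 0 sessions
third = lb.open_session()   # Server1 again
print(first, second, third)  # Server1 Server2 Server1
```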

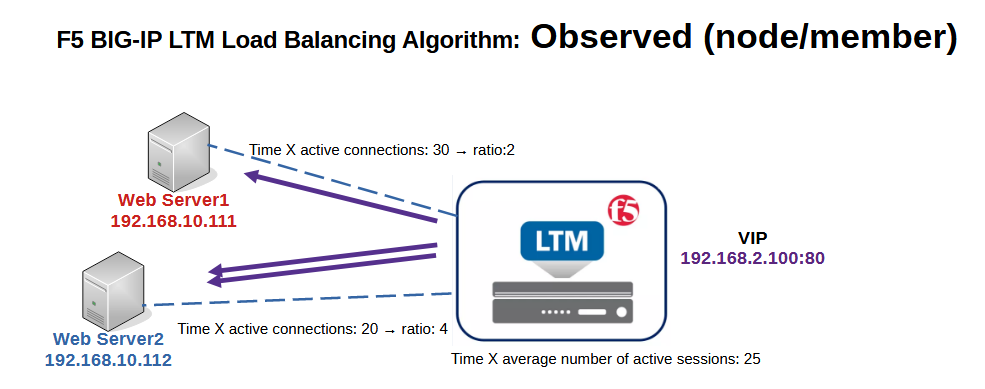

Observed (node/member):

The Observed algorithm works like the Ratio and Dynamic Ratio algorithms: requests are distributed in proportion to the ratio of the nodes or pool members.

The difference is that the ratio is recalculated every second, based on the number of active connections.

If a server has more active connections than the average, it is assigned a smaller ratio value. Conversely, if a server has fewer active connections than the average, it is assigned a larger ratio value.

In this figure, at a certain time, Server1 has 30 active connections and Server2 has 20 active connections. The average number of active connections is 25.

Server1 therefore has an above-average number of active connections, and Server2 has a below-average number. As a result, Server2 is assigned a higher ratio value, and until the next second, Server2 receives twice as many requests as Server1.
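The exact formula BIG-IP uses to turn connection counts into ratios is not given here. The sketch below therefore assumes a deliberately simple two-level rule that reproduces the figure: servers below the average get ratio 2, and all others get ratio 1. The rule and the function name are hypothetical.

```python
def observed_ratios(active: dict[str, int]) -> dict[str, int]:
    """Hypothetical two-level rule, recomputed every second: servers below
    the average active-connection count get ratio 2, the rest get ratio 1."""
    avg = sum(active.values()) / len(active)
    return {s: 2 if n < avg else 1 for s, n in active.items()}


# Figure values: 30 and 20 active connections, average 25.
print(observed_ratios({"Server1": 30, "Server2": 20}))  # {'Server1': 1, 'Server2': 2}
```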

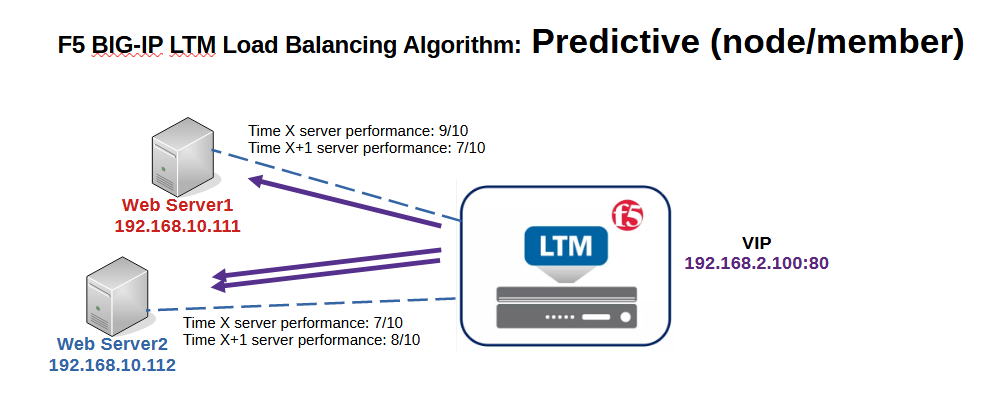

Predictive (node/member):

Predictive load balancing is similar to Observed and Dynamic Ratio: each server is dynamically assigned a ratio every second, and requests are distributed to the servers in proportion to their ratio values.

However, the ratio is assigned based on the performance trend rather than on the number of connections, as the Observed algorithm does.

If a server’s performance increases compared to the previous second, a higher ratio value is assigned, and conversely, if server performance decreases, the server is assigned a lower ratio value.

In this figure, Server1’s performance is decreasing compared to the previous second and Server2’s performance is increasing. Therefore, at time X+1, Server2’s ratio is increased and Server1’s ratio is decreased.
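The trend-based adjustment can be sketched as follows. Each second, compare a server's performance score with the previous second's. Raise its ratio if the score improved, lower it (never below 1) if the score dropped, and leave it unchanged otherwise. The scores, the step size of 1, and the name `predictive_ratios` are all hypothetical. They illustrate the direction of the adjustment, not BIG-IP's actual calculation.

```python
def predictive_ratios(
    prev: dict[str, float], curr: dict[str, float], ratios: dict[str, int]
) -> dict[str, int]:
    """Hypothetical trend rule applied once per second."""
    new = {}
    for s, r in ratios.items():
        if curr[s] > prev[s]:      # performance improving -> higher ratio
            new[s] = r + 1
        elif curr[s] < prev[s]:    # performance degrading -> lower ratio
            new[s] = max(1, r - 1)
        else:                      # no change in trend
            new[s] = r
    return new


# Time X -> X+1: Server1 is degrading, Server2 is improving.
result = predictive_ratios(
    {"Server1": 80, "Server2": 60},
    {"Server1": 70, "Server2": 75},
    {"Server1": 2, "Server2": 2},
)
print(result)  # {'Server1': 1, 'Server2': 3}
```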

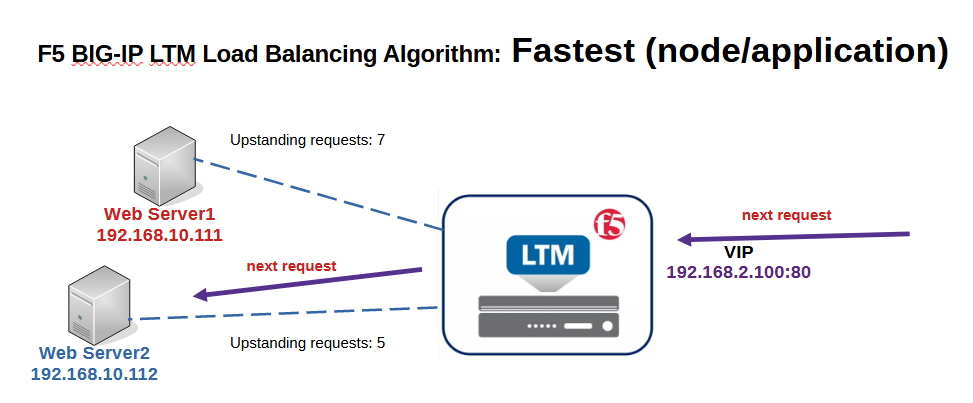

Fastest (node/application):

In the Fastest algorithm, BIG-IP counts the requests to each server that the server has not yet responded to. If many requests to a server are still waiting for a response, that server is not responding fast enough.

This algorithm is used in environments where nodes are distributed across different logical networks.

This algorithm also requires an L7 service profile and a TCP profile to be assigned to the virtual server.

The next request is forwarded to the server with the fewest unanswered requests, that is, the server that is currently the fastest.
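A minimal sketch of this bookkeeping, assuming the load balancer records every request it sends and every response it sees. Outstanding requests are then "sent minus answered", and the server with the fewest outstanding requests is picked. The class and method names are hypothetical, and the counts are illustrative.

```python
from collections import Counter


class FastestTracker:
    """Hypothetical tracker for the Fastest method: a server's load is the
    number of requests sent to it that have not been answered yet."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.sent = Counter()
        self.answered = Counter()

    def outstanding(self, server: str) -> int:
        return self.sent[server] - self.answered[server]

    def pick(self) -> str:
        # Fewest unanswered requests means the server is currently the fastest.
        return min(self.servers, key=self.outstanding)

    def record_request(self, server: str) -> None:
        self.sent[server] += 1

    def record_response(self, server: str) -> None:
        self.answered[server] += 1


tracker = FastestTracker(["Server1", "Server2"])
for _ in range(5):  # five requests sent to each server
    tracker.record_request("Server1")
    tracker.record_request("Server2")
tracker.record_response("Server1")      # Server1 answered 1 of 5
for _ in range(4):
    tracker.record_response("Server2")  # Server2 answered 4 of 5
print(tracker.pick())  # Server2
```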